Merriam-Webster’s word of 2025 was “slop.” Specifically, AI slop.

Low-effort, AI-generated content now fills social feeds, inboxes, and message threads. Much of it is harmless. Some of it is entertaining. But its growing presence is changing what people expect to see online.

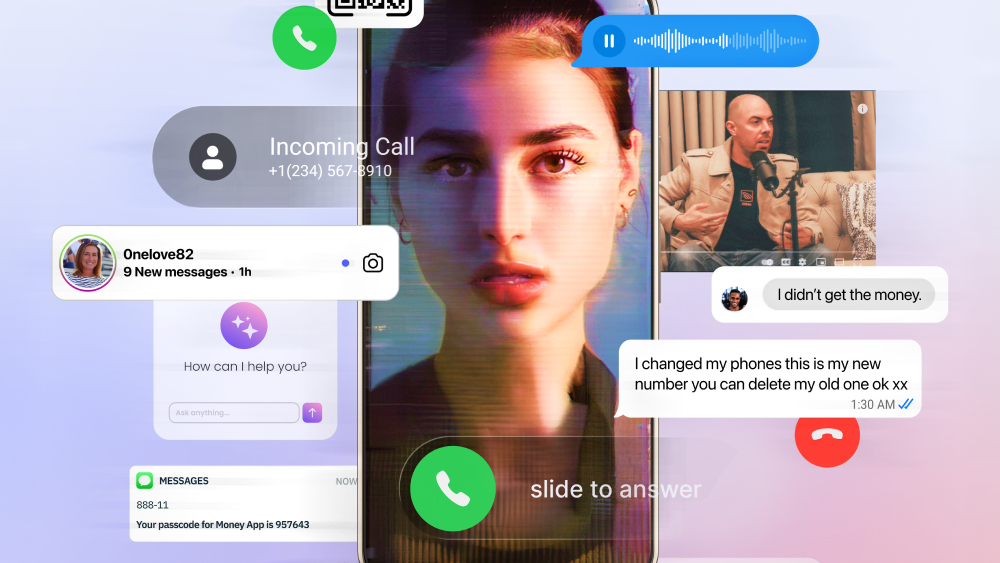

McAfee’s 2026 State of the Scamiverse report shows that scammers are increasingly using the same AI tools and techniques to make fraud feel familiar and convincing. Phishing sites look more legitimate. Messages sound more natural. Conversations unfold in ways that feel routine instead of suspicious.

According to McAfee’s consumer survey, Americans now spend an average of 114 hours a year trying to determine whether the messages they receive are real or scams. That’s nearly three full workweeks lost not to fraud itself, but to hesitation and doubt.

As AI-generated content becomes more common, the traditional signals people relied on to spot scams, such as strange links and awkward grammar, are fading. That shift does not mean everything online is dangerous. It means it takes more effort to tell what is real from what is malicious.

The result is growing uncertainty. And a rising cost in time, attention, and confidence.

The average American receives 14 scam messages a day

Scams are no longer occasional interruptions. They are constant background noise.

According to the report, Americans receive an average of 14 scam messages per day across text, email, and social media.

Many of these messages do not look suspicious at first glance. They resemble routine interactions people are conditioned to respond to.

- Delivery notices

- Account verification requests

- Subscription renewals

- Job outreach

- Bank alerts

- Charity appeals

With AI tools, scammers can churn out these messages at scale and make them look highly realistic.

That strategy is working. One in three Americans says they feel less confident spotting scams than they did a year ago.

Figure 1. Types of scams reported in our consumer survey.


Most scams move fast, and many are over in minutes

The popular image of scams often involves long email threads or elaborate schemes. In reality, many modern scams unfold quickly.

Among Americans who were harmed by a scam, the typical scam played out in about 38 minutes.

That speed matters. It leaves little time for reflection, verification, or second opinions. Once a person engages, scammers often escalate immediately.

Still, some scammers play the long game with realistic romance or friendship scams that turn into crypto pitches or urgent requests for financial support. Often these scams start not with a link, but with a familiar-looking DM.

In fact, the report found that more than one in four suspicious social messages contain no link at all, removing one of the most familiar warning signs of a scam. And 44% of people say they have replied to a suspicious direct message that contained no link.

The cost is not just money. It is time and attention.

Financial losses from scams remain significant. One in three Americans reports losing money to a scam. Among those who lost money, the average loss was $1,160.

But the report argues that focusing only on dollar amounts understates the broader impact: scams also cost time, attention, and emotional energy.

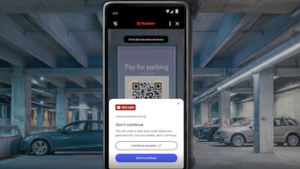

People are forced to second-guess everyday digital interactions. Opening a message. Answering a call. Scanning a QR code. Responding to a notification. That time adds up.

And who doesn’t know that sinking feeling when you realize a message you opened or a link you clicked wasn’t legitimate?

Figure 3. World Map of Average Scam Losses.

Why AI slop makes scams harder to spot

The rise of AI-generated content has changed the baseline of what people expect online. It’s now an everyday part of life.

According to the report, Americans say they see an average of three deepfakes per day.

Most are not scams. But that familiarity has consequences.

When AI-generated content becomes normal, it becomes harder to recognize when the same tools are being used maliciously. The report found that more than one in three Americans do not feel confident identifying deepfake scams, and one in ten say they have already experienced a voice-clone scam. Voice-clone scams often feature AI deepfake audio of public figures, or even people you know, urgently requesting financial support or compromising information.

These AI-generated scams also come in the form of phony customer support outreach, fake job opportunities and interviews, and illegitimate investment pitches.

Account takeovers are becoming routine

Scams do not always end with an immediate financial loss. Many are designed to gain long-term access to accounts.

The report found that 55% of Americans say a social media account was compromised in the past year.

Once an account is taken over, scammers can impersonate trusted contacts, spread malicious links, or harvest additional personal information. The damage often extends well beyond the original interaction.

Scams are blending into everyday digital life


What stands out most in the 2026 report is how thoroughly scams have blended into normal online routines.

Scammers are embedding fraud into the same systems people rely on to work, communicate, and manage their lives.

- Cloud storage alerts (such as Google Drive or iCloud notices) warning that storage is full or access will be restricted unless action is taken, pushing users toward fake login pages.

- Shared document notifications that appear to come from coworkers or collaborators, prompting recipients to open files or sign in to view a document that does not exist.

- Payment confirmations that claim a charge has gone through, pressuring people to click or reply quickly to dispute a transaction they do not recognize.

- Verification codes sent unexpectedly, often as part of account takeover attempts designed to trick people into sharing one-time passwords.

- Customer support messages that impersonate trusted brands, offering help with an issue the recipient never reported.

Figure 4. Example of a cloud scam message.

The Key Takeaway

Not all AI-generated content is a scam. Much of what people encounter online every day is harmless, forgettable, or even entertaining. But the rapid growth of AI slop is creating a different kind of risk.

Learn more and read the full report here.

FAQ: Understanding the AI Slop Era and Modern Scams

Q: What is AI slop?

A: The term refers to the flood of low-quality, AI-generated content now common online. While much of it is harmless, constant exposure can make it harder to identify when similar technology is used for scams.

Q: How much time do Americans lose to scams?

A: Americans spend 114 hours a year determining whether digital messages and alerts are real or fraudulent. That is nearly three workweeks.

Q: How fast do scams happen today?

A: Among people harmed by scams, the typical scam unfolds in about 38 minutes from first interaction to harm.

Q: How common are deepfake scams?

A: Americans report seeing three deepfakes per day on average, and one in ten say they have experienced a voice-clone scam.