Filing Taxes? Why Identity Protection Matters More Than Ever This Season

Tax season creates a rare and dangerous overlap: Americans are sharing their most sensitive personal information at the exact moment scammers are most alert. W-2s arrive. Payroll portals light up. Refund notifications start...

Feb 05, 2026 | 4 MIN READ

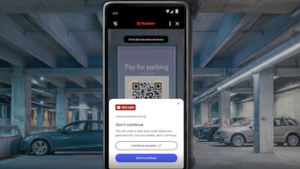

How McAfee’s Scam Detector Checks QR Codes and Social Messages

QR Code Scams and Suspicious Messages Are Rising. Here’s How Scam Detector HelpsJan 27, 2026 | 4 MIN READ
